Will engineers still be needed?

Let’s be real – when OpenAI released ChatGPT on November 30, 2022, just before Advent of Code 2022 kicked off, I was immediately impressed. It could already churn out little code snippets, especially for those mind-numbing, repetitive tasks like string parsing. Honestly, I was pleasantly surprised by the initial capabilities – it felt like a genuinely helpful assistant. But I quickly realized it wasn’t a silver bullet. The generated code contained lots of mistakes, and I saw it as a tool to augment my work, not replace it.

Fast forward a few years, and the landscape has shifted dramatically. We’re seeing a relentless stream of updates to Large Language Models (LLMs). Models like DeepSeek are now outperforming ChatGPT in terms of size, distilled Qwen models are competing with Llamacoder and Mistral, and Claude is dominating. Meanwhile, big players like Google (with Gemma and Gemini, formerly Bard) and the sheer number of open-source models on Hugging Face keep pushing the boundaries. It’s clear that the quality of the code generated by these models is constantly improving. And we haven’t seen the end of the improvements yet!

But the question remains: how long will it take developers to adapt and embrace these changes?

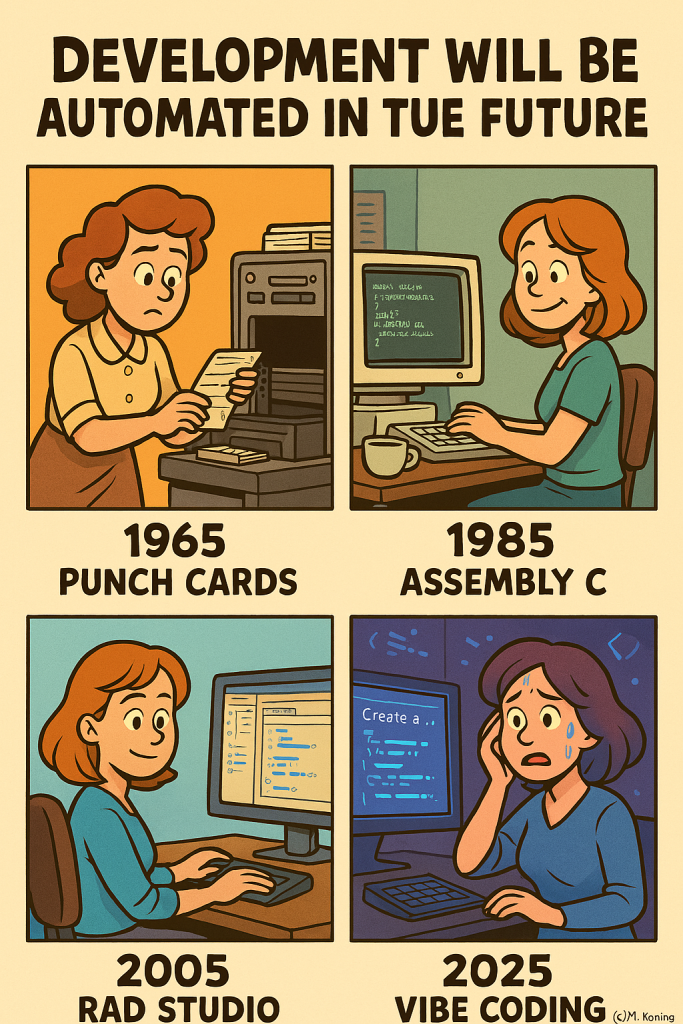

The truth is, our work has always been evolving. For as long as I can remember, the tech industry has been in a constant state of flux. So the idea that developers will suddenly need to become ‘broader engineers’ is not entirely new. It’s just a continuation of a trend that’s been going on for decades. However...

Will Engineers be “replaced” by AI?

I doubt (a reference to: On the foolishness of “natural language programming” by E.W. Dijkstra).

In order to make machines significantly easier to use, it has been proposed (to try) to design machines that we could instruct in our native tongues. this would, admittedly, make the machines much more complicated, but, it was argued, by letting the machine carry a larger share of the burden, life would become easier for us. It sounds sensible provided you blame the obligation to use a formal symbolism as the source of your difficulties. But is the argument valid? I doubt.

(from: On the foolishness of “natural language programming”, by E.W. Dijkstra)

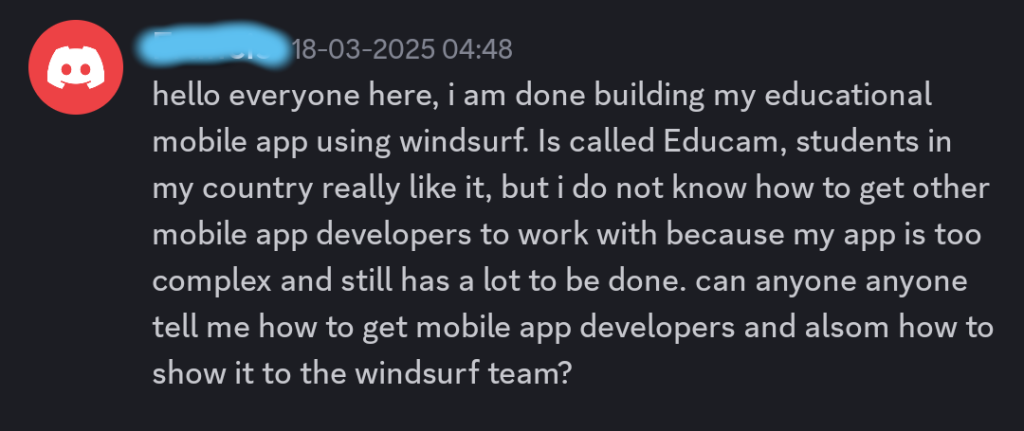

Especially when you see people who generated some nice, successful software start asking for… actual developers, like this request I found on a Discord channel of the Windsurf IDE:

OK, so you built a nice app using AI, but now the code is too complex to update and maintain? Because you built it using only... an engine with prompts, and now you are stuck. Well, ehm...

The truth is: LLMs are (at the time of writing) still just parroting as best they can, which means the code these engines spit out is not always “maintainable”, “testable”, “changeable”, “refactorable”, and so on.

But the AI models are speedy (as computers should be) and are getting better at coding at an incredible pace as well. As a software engineer, I am playing with them, using them, and running some small LLMs locally to try things out, like talking to documents using RAG, and I do see that the models are getting better at interpreting our prompts.

Being able to generate “gists” of code, and even the unit tests that verify the generated code, does shift the way engineers should look at their job. It enables us to ask more questions and, if you ask me, it brings us closer to what our customers want instead of closer to the code. However, developers being totally replaced? Again, doubtful. How to explain this? Well, maybe a small cartoon can show the changes in software engineering over the past decades?

And of course, we used ChatGPT to generate that cartoon!

Why future code maintenance could be harder:

- Loss of human reasoning:

When code is generated by an LLM, the rationale behind certain decisions may not be clear, especially if prompts were vague or inconsistent. Human language often is open to interpretation, so generated software from human language might suffer from the same problems! Future developers might struggle to understand why something was done a certain way.

- Non-standard code patterns:

LLMs might produce creative but unconventional solutions. This could lead to code that’s harder to read, test, or integrate, especially in large teams or legacy systems.

- Prompt-dependence drift:

Small changes in prompt wording can result in vastly different code. That inconsistency can create long-term unpredictability in how a system evolves.

- Error amplification:

LLMs can confidently produce subtly broken or insecure code. If not reviewed closely, these flaws compound, making future debugging and refactoring more difficult.

- Documentation gaps:

Unless explicitly prompted, LLMs won’t always generate thorough documentation. This makes onboarding or modifying systems harder over time.
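To make the “error amplification” point a bit more concrete, here is a small, hypothetical sketch in Python, loosely modelled on the kind of string-parsing puzzle Advent of Code offers. `contains_buggy` stands in for plausible-looking generated code that compares range bounds as strings, while `contains_fixed` converts them to integers first. All function names and inputs are illustrative assumptions, not actual output from any model.

```python
def parse_pair(line: str) -> list[list[str]]:
    """Split a line such as '2-8,3-7' into [['2', '8'], ['3', '7']]."""
    return [part.split("-") for part in line.strip().split(",")]


def contains_buggy(line: str) -> bool:
    """Hypothetical generated code: does the first range contain the second?

    The bounds are still strings here, so they are compared alphabetically.
    """
    (a_lo, a_hi), (b_lo, b_hi) = parse_pair(line)
    return a_lo <= b_lo and b_hi <= a_hi


def contains_fixed(line: str) -> bool:
    """Reviewed version: convert the bounds to integers before comparing."""
    (a_lo, a_hi), (b_lo, b_hi) = [
        [int(value) for value in bounds] for bounds in parse_pair(line)
    ]
    return a_lo <= b_lo and b_hi <= a_hi


if __name__ == "__main__":
    # Single-digit input hides the bug; multi-digit input exposes it.
    for line in ["2-8,3-7", "2-10,3-7"]:
        print(line, contains_buggy(line), contains_fixed(line))
```

Both versions agree on `2-8,3-7`, so a quick manual check would pass. They only disagree on `2-10,3-7`, where the string comparison `"7" <= "10"` is false. That is the kind of flaw that slips through when nobody reviews the code or writes a test with realistic input.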

In short:

LLMs are powerful tools, but like any automation, they still need human oversight to ensure systems are coherent, maintainable, and robust over the long haul. Developers won’t become obsolete—they’ll just evolve into curators, strategists, and explainers of increasingly machine-generated systems.

Developing, coding, refactoring, playing with the tools. Fun times ahead! And, what do you think? Feel free to leave a comment below!

References

A summary of links.

E.W. Dijkstra: On the foolishness of “natural language programming”.

Using RAG with AI (text in Dutch, but you can of course use your browser’s translate feature)

Advent of Code 2022: https://adventofcode.com/2022 and https://github.com/MelleKoning/adventofcode

Martin Fowler (April 2023): An example of LLM Prompting for programming

Vibe coding term coined: Vibe coding on Wikipedia

Running AI models locally using openwebui and ollama via docker: https://github.com/MelleKoning/aifun/

Windsurf IDE (an IDE geared to the use of AI for coding tasks): https://windsurf.com/editor

Comparing models for coding tasks
